Generating Datasets for Machine Learning Using scikit-learn

Generating datasets for training and testing machine learning algorithms is an effective way to deepen your understanding of the nuances of different ML techniques. Whether the goal is experimentation, learning a new technique, or assessing the impact of regularizers on different models, custom datasets are a powerful learning tool.

This note demonstrates how to use scikit-learn to generate datasets for regression, classification, and clustering for testing ideas and algorithms.

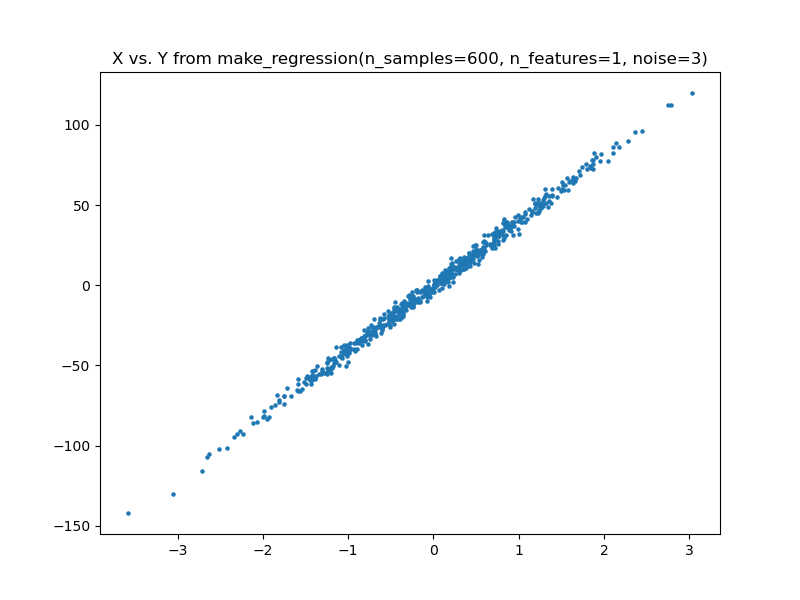

1. Regression Datasets with make_regression()

Regression techniques predict a continuous, numeric outcome variable. For example, given attributes of a house such as square footage, number of bedrooms, and number of bathrooms, a regression model would predict the price of the house in dollars from those features.

The make_regression() utility function generates linearly related datasets that can be modeled using regression. In the example below, we create a regression dataset with one feature and Gaussian noise with a standard deviation of 3 added to the output.

from sklearn.datasets import make_regression

x_variables, y_outcome, coefficients = make_regression(n_samples=600, n_features=1, noise=3, coef=True)

x_variables[:10]

array([[-0.33101357],
       [ 1.34722527],
       [ 1.0481165 ],
       [-0.35973334],
       [-0.18747076],
       [ 0.73023134],
       [-0.75271396],
       [ 0.41991507],
       [-0.12949288],
       [-0.11679652]])

import matplotlib.pyplot as plt

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

fig = plt.figure(figsize=(8,6))

plt.scatter(x_variables[:, 0], y_outcome, s=5)

plt.title('X vs. Y from make_regression(n_samples=600, n_features=1, noise=3)')

Setting the coef=True parameter returns the coefficient of the underlying linear model used to generate the data.

coefficients

array(40.44228661)
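As a quick sanity check on the returned coefficient, the sketch below refits a linear model on freshly generated data and compares the fitted slope with the one make_regression() reports. The random_state=0 seed and the use of LinearRegression are assumptions added here for reproducibility and illustration; they are not part of the original example, so the printed numbers will differ from the outputs shown above.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression

# random_state is an added assumption so that the run is reproducible.
X, y, true_coef = make_regression(
    n_samples=600, n_features=1, noise=3, coef=True, random_state=0
)

# Fit ordinary least squares to the generated data.
model = LinearRegression().fit(X, y)
fitted_coef = model.coef_[0]

# The spread of the residuals should be close to the noise level passed in (3).
residual_std = np.std(y - model.predict(X))

print(f"true coefficient:   {float(true_coef):.3f}")
print(f"fitted coefficient: {fitted_coef:.3f}")
print(f"residual std:       {residual_std:.3f}")
```

If the fitted slope sits close to the true one and the residual spread is near 3, the generated data behaves as the parameters suggest. Raising the noise argument should widen the gap between the two slopes.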

2. Classification Datasets with make_classification()

The make_classification() function takes a number of arguments; here I focus on the core ones.

- n_samples: the total number of samples
- n_features: the total number of features
- n_informative: the number of informative features

import numpy as np

import pandas as pd

from sklearn.datasets import make_classification

x_variables, y_outcome = make_classification(n_samples=1000, n_features=5, n_classes=2, n_informative=3)

dataset = pd.DataFrame({ 'target': y_outcome })

dataset[[ 'x_0', 'x_1', 'x_2', 'x_3', 'x_4']] = x_variables

dataset.head()

|   | target | x_0 | x_1 | x_2 | x_3 | x_4 |
|---|---|---|---|---|---|---|
| 0 | 1 | 2.616537 | -0.966335 | 1.586885 | -1.883197 | -1.607736 |
| 1 | 0 | -0.660331 | 0.525760 | -0.345415 | 0.264541 | 0.361471 |
| 2 | 0 | -0.594647 | 1.207680 | -1.393054 | -1.425056 | -1.520799 |
| 3 | 1 | -0.414746 | 0.357305 | -1.041710 | -0.608961 | -0.949727 |
| 4 | 0 | -1.579912 | 0.408995 | -1.045008 | 1.219592 | 0.923696 |

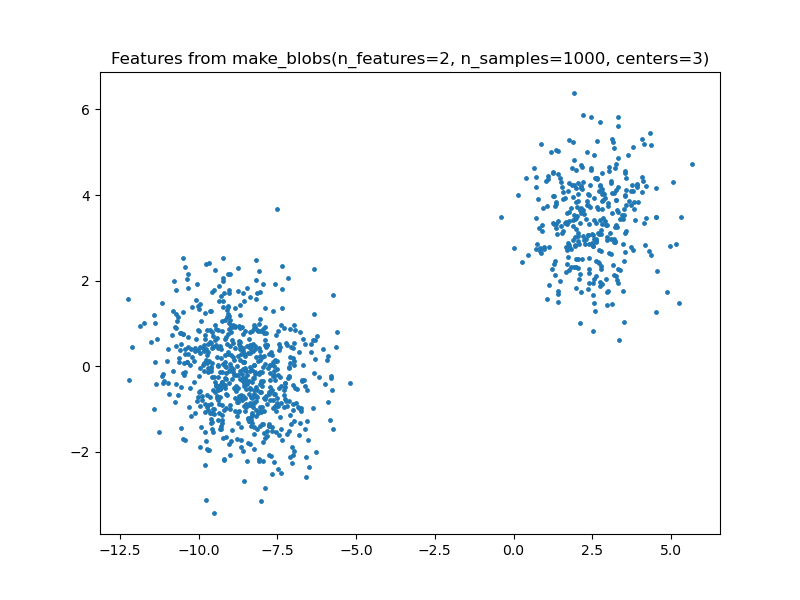
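To see how the core arguments shape the output, the sketch below inspects a dataset generated with the same settings. With n_features=5 and n_informative=3, the two remaining columns are filled by the default n_redundant=2, and redundant features are linear combinations of the informative ones, so the feature matrix should have rank 3 rather than 5. The random_state=0 seed and the rank check are assumptions added for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification

# random_state is an added assumption so that the run is reproducible.
X, y = make_classification(
    n_samples=1000,
    n_features=5,
    n_informative=3,
    n_classes=2,
    random_state=0,
)

# Classes are balanced by default, apart from a small fraction of flipped labels.
class_counts = np.bincount(y)

# Redundant columns add no new directions, so the rank equals n_informative.
rank = np.linalg.matrix_rank(X)

print("shape:", X.shape)
print("samples per class:", class_counts)
print("rank of X:", rank)
```

Passing n_redundant=0 explicitly would instead fill the remaining columns with pure noise features, which is a useful setup for testing feature selection.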

3. Clustering Datasets with make_blobs()

Another function for generating labeled data is make_blobs(). It draws isotropic Gaussian blobs around a chosen number of centers, so it offers less control than make_classification() but is simpler to use, which makes it a good fit for generating clustering datasets with any number of groups. The example below generates a dataset with 3 clusters (3 centers).

from sklearn.datasets import make_blobs

features, outcome = make_blobs(n_features=2, n_samples=1000, centers=3)

fig = plt.figure(figsize=(8,6))

plt.scatter(features[:, 0], features[:, 1], s=6)

plt.title('Features from make_blobs(n_features=2, n_samples=1000, centers=3)')
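Because make_blobs() also returns the true group of each sample, the generated data can be used to check whether a clustering algorithm recovers the groups. The sketch below is one way to do that with KMeans. The explicit center coordinates, cluster_std=1.0, the seeds, and the choice of the adjusted Rand index as the score are all assumptions made here so the blobs are well separated and the run is reproducible.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import adjusted_rand_score

# Hypothetical, well-separated centers chosen for illustration.
centers = [(-5, -5), (0, 5), (5, -5)]

features, outcome = make_blobs(
    n_samples=1000, centers=centers, cluster_std=1.0, random_state=0
)

# Cluster the points without looking at the true labels.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
predicted = kmeans.fit_predict(features)

# Compare predicted clusters with the true blobs (1.0 means a perfect match).
score = adjusted_rand_score(outcome, predicted)
print(f"adjusted Rand index: {score:.3f}")
```

Increasing cluster_std or moving the centers closer together makes the blobs overlap, which is a simple way to watch the score degrade.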

Knowing how to generate datasets is handy for experimenting and for learning how machine learning algorithms respond to parameter tuning and regularization. Whether for testing or for learning a new technique, synthetic datasets are a fun way to play with data.